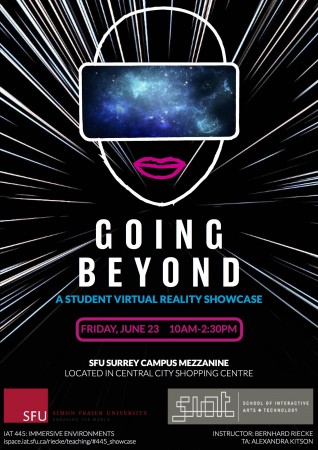

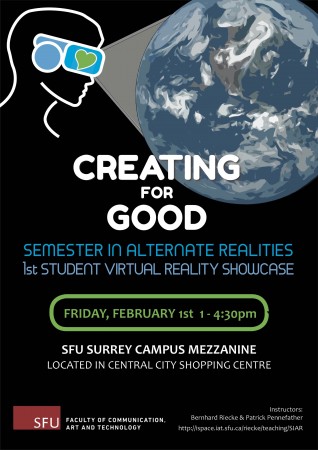

The Semester in Alternate Realities team is proud to present our students’ first showcase of their Virtual Reality for Good (“VR4Good”) projects in the Mezzanine on our SFU Surrey campus, on February 1st from 1:00 to 4:30 pm. These works were created by students from different SFU departments who joined our Semester in Alternate Realities. They are part of an emerging field of development and research that uses VR and other emerging technologies to increase awareness and act as a catalyst for improving our world. Join us and be our first public user-testers for four short and unique VR projects.

See our Semester in Alternate Realities website for more information about the course, and our Facebook event page for the first pictures of the showcase. More will follow soon.

Additional showcases with different projects will follow later this semester, likely on March 8th (2nd showcase) and April 4th (final showcase).

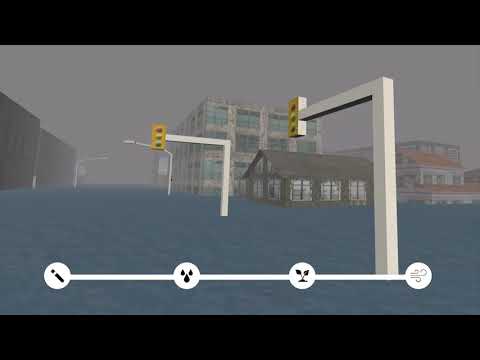

Rising Waters: Is this your future?

Rising Waters is an exploratory, context-driven game set in a future in which pollution has devastated the planet. Guided by narrative and context clues, the player navigates the world, automatically collecting samples with their robotic suit, to see how a low-lying coastal city has been damaged by environmental carelessness. Once the mission is complete, the player exits the experience and reflects on their own environmental impact.

Goal: The goal of this project is to prompt participants to consider the long-term impact of their actions on the environment through visual, auditory and experiential learning. The experience situates the participant in a familiar location, using city banners to help localize it. Participants learn how a failure to act promptly against global and local pollutants can affect a specific city: it changes how the biosphere behaves, which can contribute to rising sea levels and to degradation in the overall quality of the environment.

Core User Experience: The core user experience centers on narrative and experiential learning through interaction with the built environment. In Rising Waters, attention should focus first and foremost on how the user interacts with the built environment, which makes the core experience exploratory in nature. By having participants interact with the environment, Rising Waters aims to confront the user’s initial sense of awe with one of dread and shock. Ideally, the experience feels novel and conveys a sense of urgency that gives the user a desire to complete it.

Restless Sleep - A Waking Coma Experience: To find the forest through the eyes of others

“Restless Sleep” is an innovative VR experience built on a fictional narrative in which the user’s mind connects with the consciousness of a coma patient in order to see and feel through a comatose perception. The experience is composed of both performative and virtual elements, incorporating interactive responses between haptics and visuals, to guide users into the full embodiment of a coma patient who is constantly feeling and interacting with their physical environment.

Goal: Our goal is to foster users’ empathy towards coma patients. Many people question how conscious coma patients are of their surroundings and how much outside stimulation they can actually interpret. By going through a VR coma experience (pre, during and post), users are put into the shoes of a coma patient, where they can learn how coma patients are treated and see that these patients have a mind of their own that is not fully unconscious.

Core User Experience: The core user experience we want to accomplish is to foster empathy for coma patients and develop an understanding of what they can feel and how bystanders’ actions can influence the patient’s mental state and mental interpretations. We want users to walk away from the experience with a sense of introspective contemplation, and hopefully with knowledge of how best to handle themselves around people in comas (or even those in other states of altered consciousness). This is because we identified a surprising amount of misinformation and ignorance surrounding comatose states, as well as many first-hand accounts from comatose patients. The most impactful was the story of a girl who was in a two-week medically induced coma and her account of the hallucinatory dreams she had while in the coma. While we deliberately didn’t want to subject the users to this, we did want to bring attention to the feeling of helplessness one feels, and how it is analogous to being in VR, especially in a public setting.

https://vimeo.com/315911417

Barriers: The TRIP of a lifetime

BARRIERS is a virtual experience that allows the user to view interactions through the eyes and ears of a non-native English speaker. After biting into a “magical” cookie, the participant is transported to a fantasy world. To achieve their goal of finding a washroom, the immersant must interact with nearby talking animals.

Goal: The main emotion we are trying to evoke in our experience is the vulnerability of relying on other people to be willing to help you when you don’t understand what they are saying. What makes our project unique is its ability to make anyone feel as though they do not understand the language, since it is made up. This ties in with the course theme because it evokes empathy in the user and gives them an experience they would not otherwise have access to.

Core user experience: Through BARRIERS, we hope to place users in the vulnerable position of relying on other people for help. This experience aims to recreate the frustration that comes with not being able to speak English and the gratitude that arises when an individual takes the time to explain and use different communication techniques to foster understanding.

The Pitch of Red: Every colour has a voice

The Pitch of Red is an immersive VR experience that allows the user to experience synesthesia — a condition that blends two or more senses together. In our experience, users are able to hear the sounds of the colours they see as they wander through the mind of Wassily Kandinsky — an artist who had such a condition.

Goal: The project tries to bring the user closer to the notion of mixing human senses. Anyone can experience this kind of sensory blending to some extent; it is mostly based on what a person associates with a certain sound, colour, taste or any other humanly understood medium. The number of people who experience it is relatively small; however, the impact they have made in history and their way of perceiving the world are worth studying. The project focuses on communicating the idea of mixing human senses, also known as synesthesia, to those who have never heard of it or never experienced it.

Core user experience: For The Pitch of Red, we aim to give immersants a greater understanding of what it is like to have the form of synesthesia that associates colours with sounds or, at the very least, to be able to describe it to someone who may not know what it means. During the experience they are granted a “superpower”: being able to hear every colour that they look at. We expect users to feel curious, excited and, intentionally, a bit overwhelmed. After finishing our VR experience, we would like users to come out with a better understanding of what this particular type of synesthesia might feel like in real life.

Aesthetically, we want the experience to allow the immersant to see an alternate reality through the eyes of Wassily Kandinsky. With that said, one of the difficulties of VR is the lack of haptic touch, so our focus with The Pitch of Red is to try to simulate the relationship between sight and sound.

This research investigates how different interaction environments and the incorporation of physical touch influence users’ sense of embodiment within virtual environments. By examining how users experience their virtual bodies and environments through interaction and haptics, the study offers critical insights for designing future VR experiences that feel more natural, immersive, and meaningful.

BRieFLY explores how combining mindful breathing techniques with playful interaction in VR can foster self-awareness, emotional regulation, and self-connection. With growing concerns around mental health, VR presents a powerful medium to deliver interventions in engaging and accessible ways.

Cynophobia, the fear of dogs, affects many individuals worldwide. While live exposure therapy is a proven treatment, it can be costly, unpredictable, and culturally complex. HemisFear introduces a VR-based exposure therapy prototype designed to be both customizable and culturally sensitive.
